Assessment of your Qlik Sense Environment

In my last blog “Why Move to Qlik SaaS“, I talked about three main things. Considerations, Benefits, and Cautions. In this blog, I will help

Challenges: Extracting base reports from ERP was a manual process that involved collating, updating and

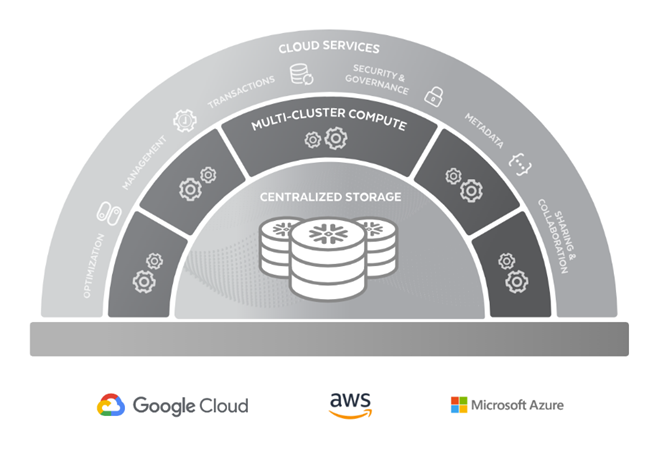

A high-performing, scalable data warehouse at the right cost for the use case matters. Traditionally, data warehouses were sized by the amount of data stored, so cost was driven largely by data volume. Snowflake's architecture brought a paradigm shift to this thinking by separating compute from storage.

This was possible due to 2 key factors:

As a result, you can store as much data as you like and still pay very little if your usage is low.
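To make the arithmetic concrete, here is a minimal sketch of a bill where storage and compute are charged separately. The prices and the `Rates` and `monthly_cost` names are hypothetical, chosen only for illustration; they are not Snowflake's actual rates or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Hypothetical prices for illustration only, not real Snowflake pricing."""
    storage_per_tb_month: float = 25.0  # assumed $ per TB stored per month
    compute_per_hour: float = 3.0       # assumed $ per hour of compute actually used


def monthly_cost(stored_tb: float, compute_hours: float, rates: Rates = Rates()) -> float:
    """Storage and compute are billed independently and then summed."""
    storage = stored_tb * rates.storage_per_tb_month
    compute = compute_hours * rates.compute_per_hour
    return storage + compute


# Same 50 TB stored in both cases; only the hours of compute differ.
light = monthly_cost(stored_tb=50, compute_hours=10)
heavy = monthly_cost(stored_tb=50, compute_hours=400)

print(f"light usage: ${light:,.2f}")
print(f"heavy usage: ${heavy:,.2f}")
```

Under these assumed rates, the storage portion of the bill is identical in both cases, and the difference comes entirely from how many hours of compute were used.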

Snowflake is built on top of the cloud computing infrastructure provided by Google Cloud, Microsoft Azure, and Amazon Web Services. It suits enterprises that don’t want to dedicate resources to the setup, maintenance, and support of internal servers, because there is no hardware or software to choose, install, configure, or manage. Any ETL or ELT tool can be used to load data into Snowflake. Snowflake enables data storage, processing, and analytic solutions that are faster, easier to use, and far more flexible than traditional offerings.